“What if patients could see all of their images, whenever they wanted? What would they do with them? What would they need to make that useful?”

MicroLens started with that question. It is an exploration of what such access would mean for patients and carers, and of the different ways it could work for the different staff, patients and carers in our service. We are publishing this post to summarise what we have done so far, but also to invite you to contribute! If you are interested in adding your thoughts, please see the link at the end of the blog post.

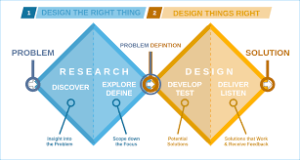

Specifically, we wanted to bring a design-led approach to thinking about a new technology. By that we mean that, instead of jumping in feet first and writing some code, we wanted to explore uses and needs first and then let the technology follow, in line with the general Design-Centred AI approach that we have outlined here.

Starting point

Historically, patients had images taken (X-rays, ultrasound, CT) at a hospital. These were then reported by a radiologist, and the report went to whoever had requested the imaging. The patient then came back to discuss the results with their treating team. More recently, we have been able to show those images to patients in clinic, so that we can explain and demonstrate what the report shows. Over the last few years, some centres have begun to offer patients routine access to their imaging reports through “Patient Portals”, which let patients see their blood test results, letters, reports, etc. We have one of these at Imperial, and it is being rolled out London-wide (https://www.careinformationexchange-nwl.nhs.uk/how-it-works). Alongside this, as computers have developed, patients can now easily look at their own imaging at home, by taking the images home on CD and downloading free software to view them.

Technology is now at a point where patients can look at their own images outside of a clinical consultation, but the available evidence shows a mismatch between what patients and users want and what clinicians and medical teams think patients and users need or want. Instead of starting with the technical aspects, the MicroLens project is about taking the time to hear from a wide range of people (patients, caregivers, researchers, multidisciplinary team members such as radiologists, clinical nurse specialists (CNSs), oncologists and surgeons, and technically minded people) over a five-month period, to find out what would be practical, needed and feasible, and how they would best use and understand their images. This is part of a wider move in the lab towards Design-Centred AI, which we explore in this post.

We looked at six main areas:

- Current tools used by patients/caregivers to view images

- Functionalities of the current tools/systems and their accessibility

- Benefits of having images with the scan reports

- When the scans/images should be made available for patients to view

- Platform: web-based, app or hybrid approach? What security is needed, and what should the sign-up and access process be?

- Professional/workforce views

1. Current tools used by patients/caregivers to view images

How do people currently view their images?

- Connecting a CD player to a bigger screen, such as a TV

- Taking screenshots of scans and viewing them as photos on their phone

- Using online platforms (e.g. Idonia)

2. Patient feedback on having access to their images

“This is what it looked like last time and this is what it looks like now and they’re perfectly aligned, and we zoom through it, and you can see from here this is what’s going on.”

“It was me that wrote the comment about putting it on the telly, and I don’t remember the technical technicalities of how we did it, but it was useful being able to put it on the bigger screen with my mum. She was at the time almost 80, so the fact that we were able to view the pictures in a bigger medium really helped to point things out to her.”

3. Patients’ and caregivers’ views on images being uploaded onto CDs

“Nowadays many people don’t have access to computers/laptops that have CD drives, so when their images are provided on CDs, if they want to view the images, they have had to purchase external CD drives.”

“There is variation across teams and services in the time taken between requesting your scans and receiving them on a CD. Having the images on a CD allows you to take your images to other consultations (worldwide), but it can also be used to display your images on your TV to explain your diagnosis to other family members/friends.”

“Images that are uploaded onto CDs have no anatomical signposting, nor do they have information about which slices are the most appropriate to view or what is actually normal vs abnormal. Patients find it difficult to interpret the imaging as they have neither the training nor the knowledge for this. The current process doesn’t allow you to compare serial images side by side, and each image needs to be looked at individually.”

4. Platforms patients use to view images

We also discussed the use of a platform called Idonia (https://idonia.com/access/login), which is designed to host medical imaging. You register for free, log in to the platform, and can then view your images. If you have your images on CD, you can drag them into the platform so that all your images are stored in one place. You can share your images on the platform by sharing a PIN code, with no geographical limit. You can also send images via email, and the platform already has some built-in tools that let you view the images in more detail, make notes on the images and store your medical history. It can act as a repository for all your images to date. The disadvantage of such a platform is that the NHS trust or treating hospital needs to purchase the rights to use Idonia, which means it is not available to all patients.

5. Advantages and disadvantages of the different ways currently used to view images outside of clinical appointments

Overall, having a platform to view images is more practical than CDs: it avoids the variability across services in the time taken to access images, and it also avoids the need for a laptop/PC with a CD drive and the relevant software to view the images. A platform allows the user to add personalised notes, share images with a wide range of people quickly and safely, and could act as a repository for their scans to date.

Functionalities of the current tools/systems and their accessibility

The group discussed why they would access their images outside of appointments. Their reasons included sharing images with other clinicians, viewing scans for their own records and understanding, explaining their diagnosis to family members/friends, and having a digital “photo album”. This highlighted that a tool would need to be personalised to a range of different needs, but would also need some common functionalities, including the ability to share images, a place to store screenshots of images, and signposting of key anatomical points.

Benefits of having images with the scan reports

Almost all the people at this meeting agreed that having some notes or reports alongside the images is as beneficial as the images themselves, as it would help them to work out where the area of concern is. There was a discussion about whether the imaging team could provide an additional report that summarised the scan in lay terms, so that it was accessible to all. We also discussed whether an area mentioned in the report could be highlighted on the scan, or marked with an arrow to indicate that region.

“For me the imaging report is equally as important. Images are all well and good, but the radiologist’s report is very important; words are very important. Images to the uninitiated can be a dangerous thing, so they need to be teamed up with something.”

When should the scans/images be made available for patients to view?

Responses to this question were split across the group, with some people wanting to have their images before the consultation and others preferring the images to become available after it.

Those who wanted the images before their consultation felt the benefits were:

“Able to come up with questions to ask my consultant rather than having to think of them in real time”

“Gives me time to process images as opposed to being in shock and not fully understanding what is being said to me. By seeing them earlier I feel less anxiety and feel more in control. I think if I’ve already seen them, I could be more prepared.”

Those who wanted the images after their consultation felt the benefits were:

“There was less anxiety or cause for concern between viewing the image and knowing the next steps in treatment; therefore I would prefer for the MDT to decide on management and then for me to view scans at consultation and then know the next steps. I would, however, like to view my scans on a historical catalogue basis. I wouldn’t necessarily need to have that information before the appointment, because I believe that would just fundamentally put a lot more stress on the team and myself. The purpose of having our images is not so that we go diagnosing ourselves, but it would be nice to have access to the images and information after you guys have done your work.”

Platform: web-based, app or hybrid approach? What security is needed, and what should the sign-up and access process be?

The group felt that a hybrid approach would be the best type of platform for patients to access images remotely, as it would allow them to access the full series of images via the web and then view snapshots of images using an app. The patients and caregivers felt that web access made it easier to compare scans, and also allowed images to be displayed on a TV screen to share with other family members. The benefits of the app were that it was convenient and allowed quick access to images.

Again, views within the group varied: some opted for a dual (two-factor) authentication process, while others felt this was not needed and that a sign-up process like that of Patient Know Best or the NHS App would be sufficient. There was also discussion about how other family members should get access to the images: should the patient share access to their images through the platform, or should only one access route be provided, with family members sharing the login?

6. Professional/workforce views

There were concerns about the extra workload that may arise as a result of patients/caregivers having access to their own images. The imaging team provided some feedback from their experiences of patients/caregivers having access to images.

“Some patients end up viewing their images and get themselves all worked up and a lot more concerned, as what is normal anatomy is perceived as something abnormal to them.”

“On other occasions, patients have access to their images and imaging reports, and the report states the scan is normal, but then the patient sees something ‘abnormal’, so as a team we have to go back to the radiologist to get to the bottom of what is actually the correct situation.”

What we have learned so far

I have spent the last five years in a range of roles across Imperial College (IC) and Imperial College Healthcare NHS Trust (ICHT), working in and around brain tumour patients. Across that time, I have become more and more interested and involved in Patient and Public Involvement (PPI). Some of that has felt difficult, and at times scary, but as a result I now lead most of the neuro-oncology PPIE (Patient and Public Involvement and Engagement) work at both IC and ICHT.

This project has helped us to understand that a tool for viewing images is not going to be one-size-fits-all, and that it will need elements of individualisation. It will be important to provide tools within the platform that help patients and caregivers to navigate through their own images and make some sense of them. The ability to link the report to the image, highlighting key areas, would also be of great value. One of the key concepts raised by most people who attended these groups is that the platform should, at a minimum, provide them with a chronological photo album of their images for their own reference.

Following our PPI sessions, we were lucky enough to talk our ideas through with Rachel Coldicutt from Careful Industries (https://www.careful.industries/).

She helped us to think critically about our project and asked us useful questions, such as: “How do they think they will use it? And how do they then actually use it?”

She also covered the concept of the image as an “object” and what this means: presented without judgement and without emotion, it allows the patient or caregiver to add the contextual and emotional aspects themselves.

She was able to give us a different outlook and perspective on the project and its next steps. For instance, we could give patients access to their scans/images, ask them to provide daily input (voice memos, journals, thumbs up/down), and then follow up with face-to-face or virtual feedback sessions on how they used it and why they rated it the way they did. This approach allows for more insightful and sensitive feedback, as it is hard for someone to comment on something until they actually have it or have used it. One of her really useful examples was imaging in pregnancy: what these scans mean to expectant mothers, and their effect on those who have unfortunately experienced a miscarriage.

NOTE: This post was written by our PPIE lead, Lillie Pakzad-Shahabi; it is being posted by MW for technical reasons.

How to get involved

We would love to hear your views on what you would like from a tool that would give you access to your images. Please complete the survey (https://imperial.eu.qualtrics.com/jfe/form/SV_8zRCUEyZjgkJ3NA). If you would like us to send you a design box so that you can get creative and go back to basics to create your “ideal platform”, please email me (l.pakzad-shahabi@imperial.ac.uk) and I would be happy to arrange for a box to be sent out to you.

Read Introducing MicroLens: Using a design approach to understand what brain tumour patients want from their images in full