Many will be wishing to discover an Xbox-shaped gift glittering under the Christmas tree this year. Aside from the seemingly endless hours of entertainment, joy, frustration and competition these consoles offer, Xbox technology, along with similar gadgets, is finding uses outside the gaming world, including in healthcare research.

Originally developed as a gaming accessory, Microsoft’s Xbox Kinect uses cameras and sensors to map a space in 3D. Combined with its motion capture capability, this means the tech can not only pinpoint objects but also track individuals’ movements and gestures. Both functions could be useful in an array of healthcare settings, as research by our Hamlyn Centre and others is demonstrating.

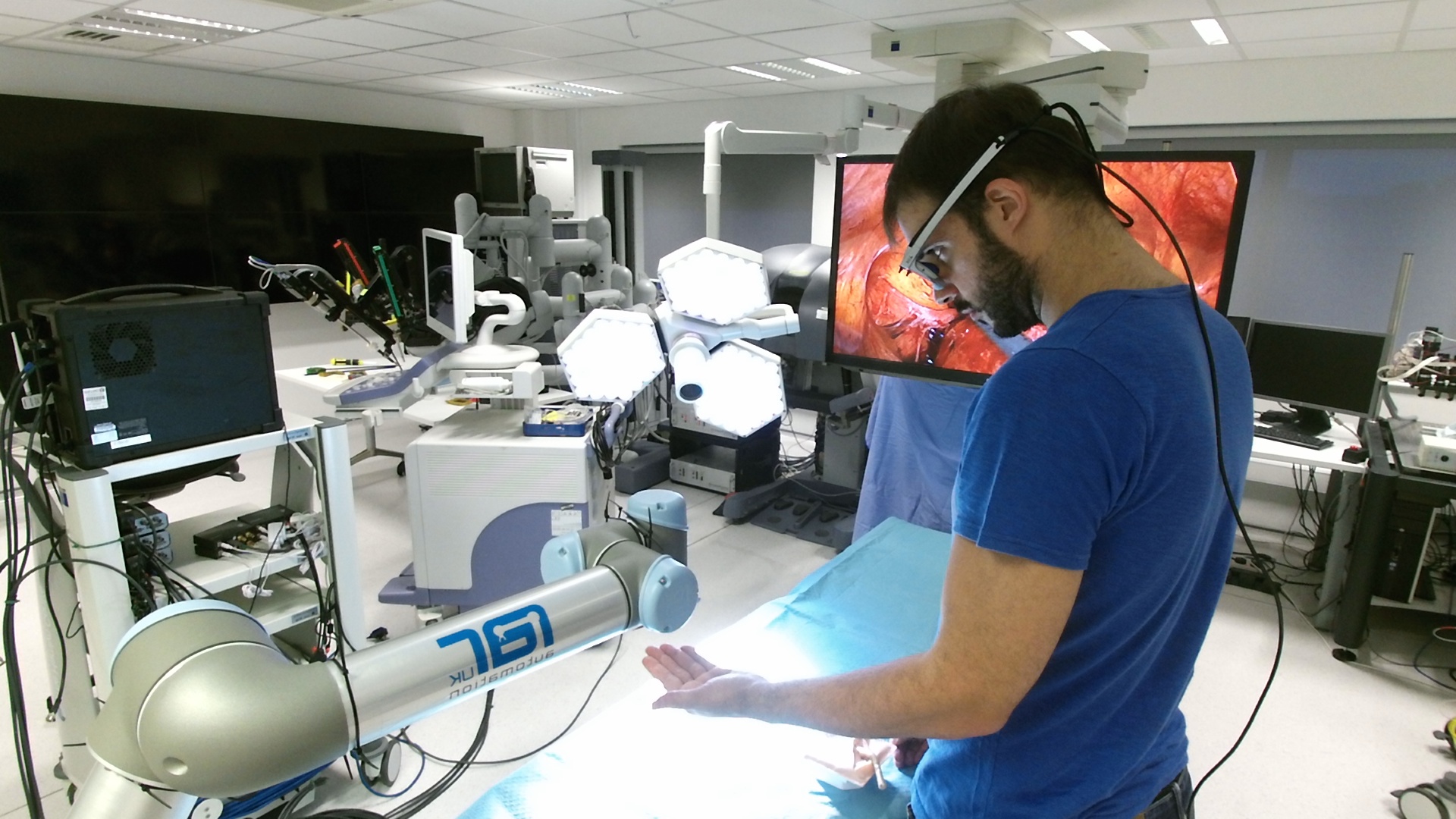

Led by Dr George Mylonas, the Hamlyn Centre’s HARMS lab focuses on using frugal innovations, coupled with perception-based techniques, to develop low-cost healthcare technologies, largely for surgery. That’s where the Kinect fits in, explains HARMS lab researcher Dr Alexandros Kogkas.

“Xbox offered one of the first commercial 3D cameras on a large scale; the technology has been well-known for many years now,” he says. “It was made available at scale because of gaming, and that’s why it’s cheap – one Kinect costs just £150, so it’s relatively low-cost to deploy.”

Xbox technology for safer surgery

One of the major ways the lab is using Kinect technology is to ease the burden on surgeons. Performing surgery is mentally and physically exhausting, and prolonged stress means that as many as 50% of surgeons can experience burnout. This puts not only the surgeon’s health and wellbeing at risk but also that of their patients, because exhausted surgeons are more likely to make mistakes.

“Our goal is to use the technology to make surgery safer,” says Kogkas. “Whether that’s by improving ergonomics, enabling better collaboration, or boosting surgeons’ skills.”

Kogkas and colleagues are working on a system that can both facilitate interactions in the operating theatre and track patterns of behaviour for analysis.

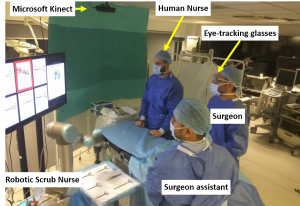

For instance, helper robots that can recognise, locate and handle instruments have been developed to assist surgeons during procedures. These “robotic scrub nurses” are designed to complement human scrub nurses, who often have to juggle many jobs at once and therefore may not be able to deliver surgical tools as quickly as needed.

The team’s system uses Kinects to map the room and its contents, and eye-tracking glasses to record where the surgeon is looking. Together, these let surgeons control the robotic scrub nurse using only their gaze: they simply look at the instrument they need, and the robot delivers it.
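Exactly how the system turns a gaze into a choice of instrument isn't described here. As a rough illustration only, the Python sketch below shows one common approach, assuming the depth camera and the eye-tracking glasses already report positions in the same 3D coordinate frame: cast a ray along the gaze direction and pick the tracked object whose centre lies at the smallest angle to it. The names `TrackedObject` and `select_gazed_object`, the 5-degree tolerance and the instrument positions are all hypothetical, not details of the Hamlyn Centre system.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TrackedObject:
    name: str
    position: Vec3  # hypothetical: object centre in a shared room frame, in metres


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def select_gazed_object(
    eye: Vec3,
    gaze_dir: Vec3,
    objects: Sequence[TrackedObject],
    max_angle_deg: float = 5.0,  # hypothetical tolerance
) -> Optional[TrackedObject]:
    """Return the object whose centre is angularly closest to the gaze ray,
    or None if no object lies within max_angle_deg of it."""
    best, best_angle = None, math.radians(max_angle_deg)
    g_len = _norm(gaze_dir)
    for obj in objects:
        to_obj = _sub(obj.position, eye)
        d_len = _norm(to_obj)
        if g_len == 0 or d_len == 0:
            continue  # degenerate ray or object at the eye position
        cos_a = _dot(gaze_dir, to_obj) / (g_len * d_len)
        angle = math.acos(max(-1.0, min(1.0, cos_a)))  # clamp rounding error
        if angle <= best_angle:
            best, best_angle = obj, angle
    return best


# Made-up example: two instruments on a tray, surgeon looking slightly right.
tray = [
    TrackedObject("scalpel", (0.5, 0.0, 1.0)),
    TrackedObject("forceps", (-0.5, 0.0, 1.0)),
]
picked = select_gazed_object((0.0, 0.0, 0.0), (0.45, 0.0, 1.0), tray)
print(picked.name if picked else "nothing")  # scalpel
```

Using an angular tolerance rather than requiring an exact hit makes the choice forgiving of small eye-tracking errors, and returning `None` when nothing is close enough avoids triggering the robot on a stray glance.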

“So far we’ve tested this system on mock surgeries performed by real surgeons and scrub nurse teams, comparing how surgeons completed the task with and without the gaze-controlled scrub nurse,” says Kogkas. “We didn’t find any major differences, which means that the system is feasible and doesn’t interrupt workflow. But we want to see an improvement in task performance, so we have more work to do.”

Building a smarter system

The system also allows surgeons to use gaze to scan through on-screen patient records, such as imaging results used to guide the procedure. This would mean surgeons don’t have to leave the room to examine the data and then re-sterilise on return, potentially reducing the length of the surgery.

“But ultimately our main goal is to make the system more intelligent,” Kogkas says. “By learning common sequences from surgeons, we want to develop algorithms that can predict the next task and prompt the robot to act accordingly.

“This is where human scrub nurses are really indispensable; they can anticipate what the surgeon needs from experience. We want to train robots to do the same.”
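Kogkas doesn't say which algorithms the team plans to use. To make the idea of “learning common sequences” concrete, here is a deliberately simple Python sketch of a first-order frequency model: it counts which instrument tends to follow which across recorded procedures and predicts the most frequent successor. The class name and the instrument sequences are made-up examples, not data from the study, and a real system would likely need far richer context than the previous instrument alone.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Optional


class NextInstrumentPredictor:
    """Hypothetical first-order model: counts (current, next) instrument
    pairs in recorded procedures and predicts the most frequent successor."""

    def __init__(self) -> None:
        self.transitions: Dict[str, Counter] = defaultdict(Counter)

    def fit(self, procedures: Iterable[List[str]]) -> "NextInstrumentPredictor":
        for sequence in procedures:
            # Count each consecutive pair of instruments in this procedure.
            for current, nxt in zip(sequence, sequence[1:]):
                self.transitions[current][nxt] += 1
        return self

    def predict(self, current: str) -> Optional[str]:
        counts = self.transitions.get(current)  # .get avoids creating entries
        if not counts:
            return None  # no recorded successor for this instrument
        return counts.most_common(1)[0][0]


# Made-up training sequences for illustration only.
history = [
    ["scalpel", "forceps", "scissors", "needle holder"],
    ["scalpel", "forceps", "retractor", "needle holder"],
    ["scalpel", "forceps", "scissors", "needle holder"],
]
model = NextInstrumentPredictor().fit(history)
print(model.predict("forceps"))  # scissors
```

A prediction like this could be used to have the robot stage the likely next instrument in advance, while the surgeon's gaze still confirms the final choice.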

Preventing errors in the operating theatre

Robots aside, learning from the data generated by the system could help surgeons in other ways, too. By tracking metrics like blinking rate, head position and body posture, the researchers hope to develop artificial intelligence algorithms that could, for example, detect when surgeons are experiencing fatigue or objectively quantify their effort. This could then prompt a programmed response, such as brightening the lights to stimulate wakefulness, triggering an alert for colleagues to intervene, or signalling the theatre team to raise its level of alertness.

“We hope our framework could be applied in ‘Surgery 4.0’ – data-driven surgery,” explains Kogkas. “Ultimately, we want to be able to use our data to help predict errors before they occur, and find ways to intervene to stop them from happening.”

This will likely require much more information than the current system captures, though, so the group has begun collaborating with other institutions, including Harvard Medical School, to integrate more sensors. These could include an EEG cap to read brainwaves, as well as monitors for heart rate or other indicators of stress.

“We hope to see our framework deployed soon in operating theatres,” Kogkas says. “Although we developed it for surgery, we realised we could apply this framework in other settings as well. That’s when we began our work on assistive living.”

From hospital to home

For people with severe disabilities, such as tetraplegia, gaining a degree of independence could be life-changing, and Kogkas and colleagues hope their work can offer that. They’re using their framework to develop a robotic system that can assist with daily living activities for people who have lost the ability to use their limbs.

As in the operating theatre setup, the researchers are training algorithms that let robots recognise and locate objects found in the home, such as a mug or a box of cereal. A Kinect or similar sensor installed in the person’s room tracks these objects, so that the person’s gaze, recorded through eye-tracking glasses, can be mapped onto them.

A person could, therefore, use the system to direct a robot to carry out various tasks, such as moving objects around or pouring a bowl of cereal.

“This is a big, complex project, in part because there are so many different tasks that need to be programmed,” explains Kogkas. “It’s one thing being able to recognise and move a coffee cup, but to be useful the robot also needs to bring it towards the person’s lips so they can drink from it.

“Other labs are developing inventories of different daily living activities, so we hope that we can collaborate to take this research forward to the next phase.”

Progress is already under way. Having tested the system successfully in healthy volunteers, Kogkas and colleagues have now been granted ethical approval to trial the technology in patients for the first time. The study is due to begin next year.

“For us, it’s important to do translational research,” he says.

“That means we don’t only care about developing something to publish in an academic journal. We want to actually see it used in the real world, to help people and improve the quality of healthcare.”