Imperial’s Dr Sam Cooper briefs Parliamentarians

From climate modelling to drug discovery, AI is reshaping science by accelerating data analysis, enhancing modelling, and introducing new forms of automated discovery. With the UK government expected to publish an AI for science strategy imminently, this year’s Evidence Week was an opportune time to meet Parliamentarians to discuss what policies and investment are needed to accelerate AI-enabled scientific discovery in the UK.

Evidence Week is the flagship annual science engagement event of the charity Sense About Science. The week is built around a series of events designed to bridge the gap between scientific research, the policymaking process and the public. At this year’s event, Dr Sam Cooper, Associate Professor of Artificial Intelligence for Materials Design, took his team to Westminster to brief Parliamentarians on how AI and robotic laboratories can reshape the UK’s innovation landscape and accelerate progress in clean energy and advanced materials.

Three key takeaways

- LLMs can accelerate and unify exploration across scientific domains. In a 2024 paper, Dr Cooper and colleagues found that large language models (LLMs) can extract experimental parameters and insights from scientific literature with up to 90% accuracy, reducing the manual burden on researchers and accelerating hypothesis generation.

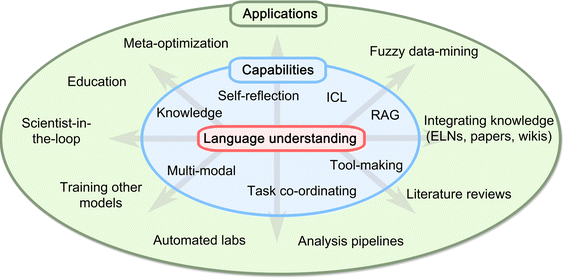

Diagram of LLM capabilities and potential materials-science-related applications, from G. Lei, R. Docherty and S. J. Cooper, "Materials science in the era of large language models: a perspective", Digital Discovery, 2024, 3, 1257–1272, https://pubs.rsc.org/en/content/articlehtml/2024/dd/d4dd00074a

- Combining AI with robotics is key to solving the reproducibility crisis in science. Traditional lab work is slow, inconsistent and hard to reproduce. Automated labs like Imperial’s DIGIBAT project generate reliable, high-quality datasets that allow AI models to test and learn faster, making science more trustworthy and efficient.

- Infrastructure investment is vital to stay competitive. LLMs and other AI systems can revolutionise materials R&D, but only if they’re trained on data produced by automated labs. Public investment in compute, robotics and open data will help the UK remain a global leader in frontier science.

From research to policy

Dr Cooper’s discussion with Parliamentarians focused on how policymakers can create the right environment for responsible AI-enabled science. He outlined three priority actions:

- Fund national-scale robotic science facilities. Treat high-throughput automated labs as critical infrastructure—on par with supercomputers or particle accelerators—to generate the reproducible datasets that drive innovation.

- Establish a UK-wide materials data platform. Develop open, standardised databases so that data from robotic labs can be shared, reused and applied to training AI models across academia and industry.

- Expand AI talent pipelines. Support doctoral programmes, cross-sector apprenticeships and retraining initiatives that blend materials science, machine learning and automation.

Real-world impact

Automating the design, execution and analysis of experiments can compress research cycles from months to weeks. By pairing robotics with AI, scientists can explore thousands of potential materials faster and more reliably, accelerating breakthroughs in batteries, catalysts and sustainable manufacturing.

In 2024, Dr Cooper spun out a company from Imperial. Polaron uses generative AI to design and optimise the microstructure of materials (such as battery electrodes), enabling manufacturers to explore thousands of variants in days instead of years.

Polaron’s models have shown a boost of over 10% in battery energy density, and their technology is also finding application in pharmaceuticals, composites for wind turbines, alloys for jet engines and even food-texture optimisation.

Looking ahead

It was fantastic to hear Parliamentarians ask thoughtful questions about access to facilities, data sharing and how regulatory frameworks should adapt to AI-driven experimental work. Parliament will continue to play a crucial role in scrutinising whether the UK government’s actions are realising the potential of the UK’s existing strengths in AI for science. By acting now, through establishing national facilities, open data infrastructure and talent programmes, the UK can secure its position as a world leader in AI for science and deliver the materials and technologies that underpin a sustainable future.

To learn more about how AI is revolutionising scientific discovery across Imperial, please contact George Woodhams.