This post is authored by Yusuf Ozkan, Research Outputs Analyst, and Dr Hamid Khan, Open Research Manager: Academic Engagement.

Researchers increasingly use social media to communicate their research. They share links to journal articles, but also other types of output like preprints, graphics/figures and lay summaries.

This sharing lets us measure alternative indicators of research visibility beyond citations of, and in, journal articles. With many services like X, Mastodon, Threads and LinkedIn, researchers and the public are scattered across the social media world, which makes tracking visibility difficult. Bluesky has joined the club recently and is growing rapidly. In this post, we highlight how research-related conversations and citations of Imperial outputs have increased on Bluesky, emphasising the value of using the Library’s tools to track citations on social media.

Although Bluesky is a relatively new platform – launched in 2023 as an invitation-only service – it has reached nearly 30 million users at the time of writing. The number of users increased by seven million in just six weeks from November 2024.

Many people have migrated from X (formerly Twitter) to Bluesky during this period, partly following the US election, but the reasons for migration are not limited to politics. Bluesky also surpassed Threads in website user numbers. The rapid increase in users and growing trend of researchers joining Bluesky is making it an increasingly convenient forum for research conversations.

Given the increase in users, we would expect to see research outputs being shared more widely on Bluesky. But it is extremely difficult, if not impossible, to manually measure that. This is where Altmetric comes into play, to track mentions of outputs.

Altmetric is a tool for providing data about online attention to research by identifying mentions of research outputs on social media, blog sites, Wikipedia, news outlets and more. Altmetric donuts and badges display an attention score summarising all the online engagement with a scholarly publication. Altmetric can be useful to show societal visibility and impact, though its limitations should also be kept in mind. Imperial Library has a subscription to Altmetric. We can use Altmetric to see how social media users interact with Imperial’s research outputs. It’s one of many tools we use to support researchers to move away from journal-based metrics for evaluating the reach of their work.

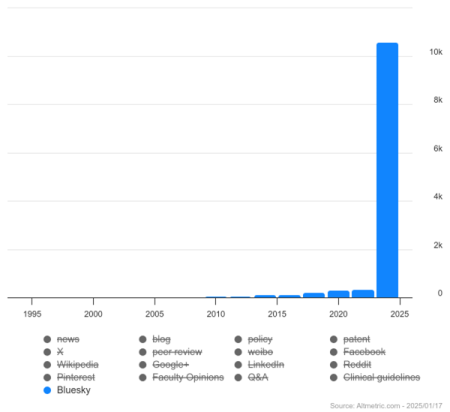

The migration of researchers away from X prompted Altmetric to start monitoring emerging platforms, leading to the inclusion of Bluesky in Altmetric statistics in December 2024, although Altmetric had been picking up citations on Bluesky since October.

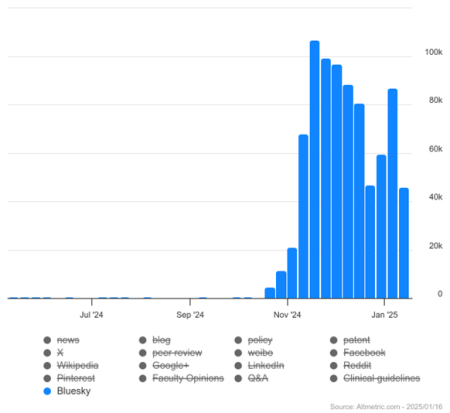

Nearly 400 thousand Bluesky posts cited a research paper between late October and mid-January, a period of less than three months. That is a significant milestone, considering it took Twitter nine years to reach 300 thousand posts linking to a research paper.

Bluesky is a rising star for research conversations online, but what is the situation when it comes to mentions of Imperial research outputs? Well, the trend is no different from the overall picture.

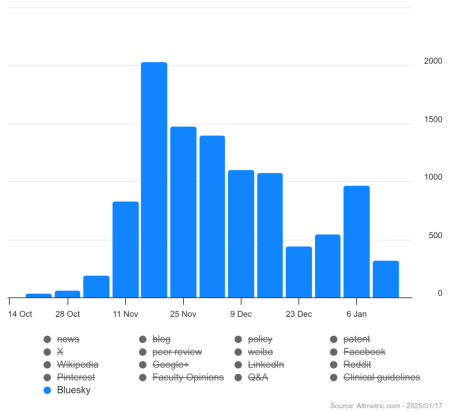

Altmetric identifies over 11,000 mentions of publications on Bluesky associated with Imperial authors from November 2024 to January 2025. The number of Imperial output mentions on X is four times higher than on Bluesky for the same period. Given that Bluesky launched only recently and has about a tenth as many users as X, the figure is still substantial.
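To make that comparison concrete, here is a rough back-of-the-envelope sketch that normalises mentions by user numbers. It uses only the rounded figures quoted in this post, reads "four times higher" as "four times as many", and treats the X user count as an assumption derived from the "ten times" comparison. It is an illustration, not an Altmetric calculation.

```python
# Approximate figures quoted in this post (rounded; not exact Altmetric exports).
bluesky_mentions = 11_000          # Imperial output mentions on Bluesky, Nov 2024 to Jan 2025
x_mentions = 4 * bluesky_mentions  # assumption: "four times higher" read as four times as many
bluesky_users = 30_000_000         # "nearly 30 million users"
x_users = 10 * bluesky_users       # assumption: X has roughly ten times as many users


def mentions_per_million_users(mentions: int, users: int) -> float:
    """Return mentions normalised per one million platform users."""
    return mentions / users * 1_000_000


bluesky_rate = mentions_per_million_users(bluesky_mentions, bluesky_users)
x_rate = mentions_per_million_users(x_mentions, x_users)

print(f"Bluesky: {bluesky_rate:.0f} mentions per million users")
print(f"X:       {x_rate:.0f} mentions per million users")
print(f"Bluesky rate is {bluesky_rate / x_rate:.1f}x the X rate")
```

On these rounded inputs, Imperial outputs are mentioned roughly two and a half times as often per user on Bluesky as on X, which is why the raw total can be called substantial despite being smaller.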

The mentions of Imperial publications on Bluesky followed a similar trend to the overall mentions of research outputs on the platform. There was a massive uptick in mid-November 2024, taking the number from a few mentions to thousands per week. Although the number of mentions appears to be coming down, the increasing number of overall Bluesky users and posts suggests citations are not likely to return to their pre-November level.

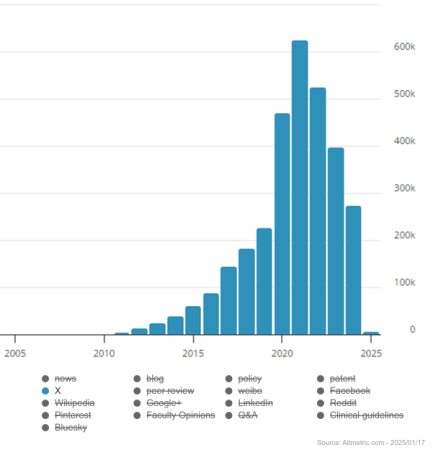

Comparing all-time mentions on Bluesky with those on X gives us another perspective on how sharing practices have changed. The number of X mentions of Imperial outputs has decreased consistently since 2021, from 620K mentions to 270K in 2024. If this trend continues, we expect to see just over 100K mentions in 2025.

Even though Bluesky is just two years old and Altmetric has been including mentions on the platform for only three months, the volume of mentions is impressive.

Bluesky is a new social media platform with a growing user base. The volume of research-related conversation on Bluesky has increased since October 2024, making it the second-largest data source tracked by Altmetric over the past three months. Imperial research outputs are widely shared on the platform, too, with over 10K citations in the same period. But there is a note of caution.

Social media is great for increasing visibility and reach. It can be a good way to encourage open and collaborative peer review, and ultimately help improve quality and impact. However, metrics provided by platforms like Altmetric can be misleading, as they don’t track everything happening on the internet. For example, Altmetric only includes historical data for LinkedIn. Current mentions are not tracked despite the presence of many researchers on LinkedIn.

Social media platforms have some biases, such as vulnerability to manipulation and gaming (just like the Journal Impact Factor), imbalanced user demographics, and either over- or under-representation of an academic discipline on one platform. Counting citations is a risky business, because social media mentions do not necessarily point to positive impact or high quality. Someone could be critiquing or rebutting your work in citing it. Despite limitations, diverse platforms for sharing research are good for discoverability, since one user of a platform may not use another. This increases the potential impact of research by reaching diverse audiences. Bluesky is a recent and promising example, demonstrating how emerging platforms can broaden the reach and visibility of research publications.

To see how your research is being seen and cited on social media, you can make use of the Library’s subscription to Altmetric. Get in touch with the Bibliometrics service to discuss ways to measure the visibility and impact of your work other than the flawed Journal Impact Factor.

Note: This post was authored in mid-January. Therefore, some of the figures might have changed by the time of publication.