Despite claims to the contrary, open access as such is not very complicated. Either publish your scholarly output with a publisher who will immediately make it available as open access, or put a copy of the (peer-reviewed) manuscript in a repository. What makes open access complicated is the myriad of policies that regulate it.

The Registry of Open Access Repository Mandates and Policies (ROARMAP) alone lists well over 700 OA policies – just from research organisations and funders. If you add publisher policies it gets even more confusing. As a sector we often complain about the difficulties publishers create with journal embargoes. We also criticise funders for not aligning their policies. These criticisms are valid, but we tend to gloss over the fact that universities do not always align their policies either. Policies that vary across universities make it more difficult for third parties to provide solutions, as these have to map onto a wide range of workflows that result partly from the different policies. Different institutional policies also make it harder to communicate open access to academics.

I have on a few occasions suggested that we should aim to align institutional policies more, and that we should also simplify them. Thankfully, I am not the only one thinking about this. Jisc, SHERPA Services and ROARMAP have jointly developed a Schema for Open Access policies. The schema should help policymakers “to express their policies in a systematic manner”, as “an initial step to ensure greater clarity and uniformity in the way information about OA policies is recorded and made available”. Imperial College was one of 30 institutions that provided information to the new initiative. You can read more about the schema, initial findings and how to engage on the Jisc blog.

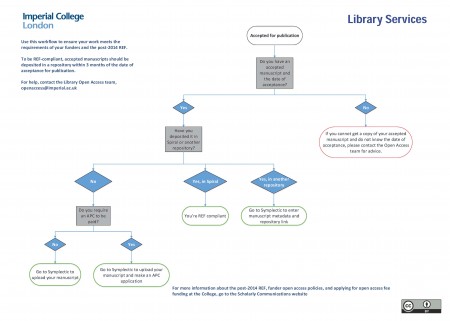

My ideal would be that over time we move to a single open access policy, or at least to a core policy to which institutions can add a selection of clearly defined elements to reflect their specific needs – where this is really necessary, of course. In the UK we do already have what could be considered the core of an OA policy, the Policy for open access in the post-2014 Research Excellence Framework. Leaving the details aside, the policy requires deposit on acceptance (for publication). Currently it only applies to scholarly articles and conference proceedings, but I would argue that this makes it ideal as a starting point, as these more formalised outputs (compared to e.g. performances) are easier to deal with across institutions.

Therefore, my suggestion for a minimal universal OA policy would be:

- Publish in the journal of your choice, including fully open access journals (subject to availability of funding).

- Deposit a copy of the peer-reviewed manuscript of your journal article or conference proceeding into a repository on acceptance for publication.

Incidentally, that is effectively the OA policy at Imperial College. As the vast majority of College publications are articles or conference proceedings we can effectively limit the policy to these, at least for the moment. An institution with a more diverse range of outputs may decide to add monographs, videos, websites etc., and those who cover costs for hybrid open access (Imperial’s own fund does not support it) may want this included as well.

I fully understand that just two bullet points will not be enough. However, I would like to put out a challenge: look at your institution’s open access policy and think about which elements you really need, and how you could simplify it in a way that would help us move towards a universal policy. And make sure to check out the schema!