Dr Tim Hoogenboom, a Research Sonographer, looks at the promise and perils of machine learning in medical imaging.

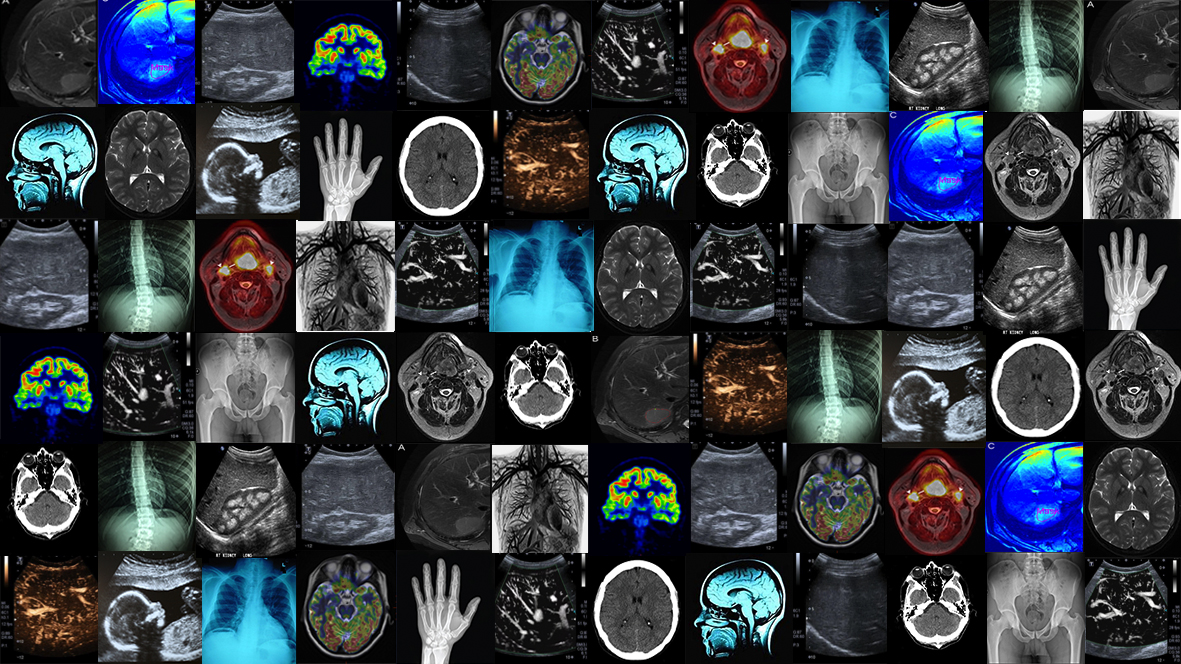

Medical imaging is key to the delivery of modern healthcare, with an immense 41 million imaging tests taking place in England every year. Thousands upon thousands of patients safely undergo imaging procedures such as X-ray, ultrasound, and MRI every day, and the products of these tests – the images – play an essential role in informing the decisions of medical professionals and patients in nearly every area of disease.

At its core, medical imaging is the application of physics, and sometimes biochemistry, to visually represent the biology and anatomy of living humans. We have progressed from the first blurry X-ray in 1895 to being able to measure minute changes in oxygenation within the brain, and major technological advances continue to be made every year. In research settings, these techniques are applied to expand our understanding of the human body and disease, but much of this technology does not actually make it into everyday clinical practice. For me, this has been the drive to move from a career in sonography into clinical research: to implement novel technology and investigate how it can be used to improve patient care.

One of these advancements is the use of image analysis technology to obtain more information from medical images. There has always been an interest in the use of computers to analyse medical images as computers are not biased by optical illusions or experience like human readers are. In image analysis, an image is no longer considered as visual, but rather as digital information. Each pixel contains a value representing biophysical properties, and you can write a program that finds a specific pattern or feature across the image that can represent disease. However, this process is time-consuming, and a single feature probably doesn’t represent a disease very accurately.
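To make the idea of an image as digital information concrete, here is a minimal sketch in Python. The tiny 4x4 image, the `bright_fraction` function, and the threshold of 128 are all made up for illustration; real medical images are far larger and their pixel values depend on the imaging technique.

```python
# Hypothetical 4x4 greyscale image: each number is one pixel's intensity (0-255).
# The bright block in the middle stands in for a structure of interest.
image = [
    [10, 12, 11, 13],
    [12, 200, 210, 11],
    [11, 205, 220, 12],
    [13, 12, 10, 11],
]


def bright_fraction(pixels, threshold=128):
    """Return the fraction of pixels whose intensity exceeds the threshold.

    This is one hand-written "feature": a single number that summarises
    a pattern across the whole image.
    """
    values = [value for row in pixels for value in row]
    return sum(value > threshold for value in values) / len(values)


print(bright_fraction(image))  # 0.25 (4 of the 16 pixels are bright)
```

A feature like this has to be designed, coded, and validated by hand, which is why the approach is slow, and one number on its own rarely captures a disease.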

Machine learning – a type of artificial intelligence (AI) which gives computers the ability to learn without being explicitly programmed – could be the solution to this problem. If you feed a machine learning algorithm sufficient data, it will find the relevant patterns for you, and teach itself which ones are important clinically! The quality of a machine learning algorithm is highly dependent on the amount of data you put into it. With medical images being one of the fastest growing data sources in the healthcare space, we are now reaching the point where image analysis could soon see widespread use in the clinic. We could see algorithms similar to the ones Google uses to determine what ads to show you, or the ones Amazon uses to tell you what products to buy, telling your doctor if you have cancer.
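As a toy illustration of learning from data rather than being explicitly programmed, the sketch below lets the computer choose its own cut-off from labelled examples. It is a one-feature decision stump, far simpler than anything used clinically, and the feature values, labels, and function names are invented for the example.

```python
def fit_threshold(features, labels):
    """Choose the cut-off that classifies the most training examples correctly."""
    best_threshold, best_correct = None, -1
    for candidate in sorted(set(features)):
        # Predict "disease" when the feature is at or above the candidate cut-off.
        correct = sum(
            (value >= candidate) == bool(label)
            for value, label in zip(features, labels)
        )
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


def predict(value, threshold):
    """Apply the learned cut-off to a new, unseen image feature."""
    return value >= threshold


# Hypothetical training data: one numeric feature per image,
# with a label of 1 for disease and 0 for healthy.
features = [0.02, 0.05, 0.08, 0.30, 0.35, 0.40]
labels = [0, 0, 0, 1, 1, 1]

threshold = fit_threshold(features, labels)
print(threshold)                 # 0.3
print(predict(0.33, threshold))  # True
```

Nobody told the program where the cut-off should be; it found it from the examples. With only six examples the result is fragile, which is why the amount of data matters so much.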

The potential benefits are numerous, life-saving, and almost certainly cost-saving. Imagine the problem of a rare disease, which a trained radiologist might encounter once in their professional life. Machine learning could be applied to images obtained around the world to develop an algorithm that can diagnose a new patient within seconds. With every new patient added, the algorithm will learn and improve in accuracy.

Measuring response to chemotherapy could be done in seconds by automatically segmenting every cancerous lesion, measuring its size, and checking whether patterns within it have changed from baseline. Currently, a radiologist has to view hundreds of images to achieve a similar, but ultimately subjective, result.
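Once a lesion has been segmented, the measurement itself is simple arithmetic. The sketch below assumes the hard part is already done: the two binary masks (1 for lesion, 0 for background) and the pixel area of 0.25 mm² are made-up inputs, and the percentage change shown is an illustration, not a clinical response criterion.

```python
def lesion_area_mm2(mask, pixel_area_mm2):
    """Area of a segmented lesion: number of lesion pixels times the area of one pixel."""
    return sum(sum(row) for row in mask) * pixel_area_mm2


def percent_change(baseline, follow_up):
    """Change from baseline as a percentage (negative means the lesion shrank)."""
    return 100.0 * (follow_up - baseline) / baseline


# Hypothetical segmentation masks of the same lesion before and after treatment.
baseline_mask = [
    [0, 1, 1, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [0, 1, 1, 0],
]
follow_up_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]

PIXEL_AREA_MM2 = 0.25  # assumed: each pixel covers 0.5 mm x 0.5 mm

before = lesion_area_mm2(baseline_mask, PIXEL_AREA_MM2)
after = lesion_area_mm2(follow_up_mask, PIXEL_AREA_MM2)
print(before, after, percent_change(before, after))  # 3.0 1.5 -50.0
```

The same calculation gives the same answer every time it is run on the same masks, which is the appeal over a subjective visual comparison.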

However, for various reasons, transferring image analysis and machine learning from research into the clinic is difficult. There are significant safety concerns with the use of new measurements to guide clinical decision making. Image analysis allows us to examine things in ways we couldn’t before, but the patterns a computer detects might not always make sense to us. Furthermore, the selection process of machine learning algorithms is still poorly understood: how does an algorithm decide what is important and what isn’t? Do we want to use technology if we don’t understand exactly what aspect of biology it is measuring?

There are also legal and ethical concerns that need to be considered. Who is responsible if a computer error causes a tumour to be missed or misidentified, or if a patient is bumped up the transplant list by mistake? Do we tell patients about all the abnormalities they have, even if there is no treatment for them (or no need to treat them)?

Companies might withhold investment until they are assured that they will not be held legally accountable if their software makes a mistake. Should they be? Hospitals are increasingly aware that their image archives are of tremendous value to the developers of these techniques, but there are obvious ethical concerns with them sharing images of your organs for commercial gain. The recent WannaCry attack, which devastated NHS IT systems, raises another concern: if medical images hold important health information that can be extracted by anyone with sufficient computing power, will they become a target for hackers? Insurance companies?

Currently, images used to develop and test image analysis and machine learning algorithms mainly come from clinical trials. We have certainly reached the point where any study that collects images as part of its follow-up or assessments should ask its participants if these images can be stored for image analysis studies. For large studies, ethics committees should ask whether it is appropriate for a study to be given the green light if an image analysis component has not been considered as part of the main study design.

In my research within the Centre for Digestive Diseases, I use image analysis to describe vascular patterns in the livers of patients with fatty liver disease, cirrhosis, and liver cancer. The aim is to eventually develop a method that can be used in everyday clinical practice to find those patients most likely to progress in their liver disease or develop liver cancer, so that they can be treated early. Fatty liver disease affects approximately a quarter of the global population, and it can take up to 20 years before someone starts to exhibit symptoms. We need methods that can find those patients most in need of treatment before they develop liver failure or liver cancer. I believe image analysis can help us achieve this by gathering more information from the scans we obtain so regularly.

The problems of introducing artificial intelligence and image analysis in the clinic are numerous, but I think it is important to view them as a challenge to overcome, rather than something to fear and avoid.

Tim Hoogenboom is a Research Sonographer based in the Division of Integrative Systems Medicine and Digestive Diseases. He gained clinical experience as a medical imaging and irradiation expert in the Netherlands before transferring into translational research at Imperial in 2013. His current research is funded by the Imperial Health Charity and the RM partners Accountable Cancer Network in partnership with the NIHR Imperial BRC.

Following the launch of the Faculty of Medicine’s reorganised academic structure on 1 August 2019, this post was recategorised to Department of Metabolism, Digestion and Reproduction.

Super cool article!

I absolutely agree that machine learning will help us to better understand medical images, and that using the technology will definitely save lives. Nevertheless, there is always a margin of error, particularly when it comes to new diseases, as ML will only learn from past data. I’m a bit nervous about that.